Batch exports can be used to export data to a BigQuery table.

Setting up BigQuery access

To set up the right permissions for a batch export targeting BigQuery, you need:

- A Service Account.

- A dataset with permissions that allow the Service Account to access it.

Here's how to set these up so that PostHog has access only to the dataset it needs:

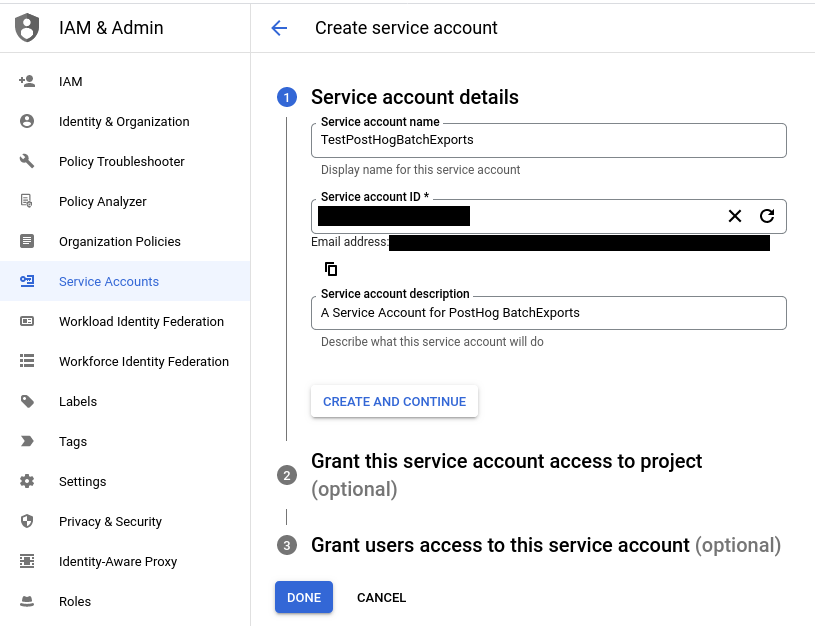

- Create a Service Account.

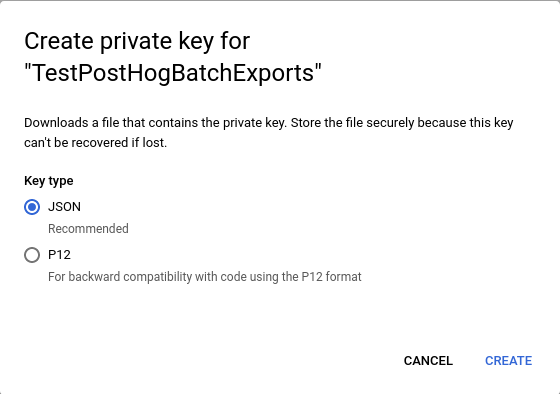

- Create a key for the Service Account you created in the previous step.

- Save the key file as JSON to upload it when configuring a batch export.
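Before uploading the key file, it can help to confirm that you downloaded a service account key rather than some other credential. The following is a minimal Python sketch, not part of PostHog: it only checks that the file is a JSON object whose `type` is `service_account` and that it contains a few fields Google Cloud service account keys include. The function name and the sample values in the demo are hypothetical.

```python
import json

# A few fields found in Google Cloud service account JSON key files.
# This is a basic sanity check, not a full validation of the key.
REQUIRED_KEY_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_key_file(text: str) -> list[str]:
    """Return a list of problems found in a key file's contents (empty if none)."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]

    # Report every expected field that is absent.
    problems = [f"missing field: {name}" for name in REQUIRED_KEY_FIELDS if name not in key]
    # Other credential types (e.g. user credentials) use a different "type".
    if "type" in key and key["type"] != "service_account":
        problems.append(f"unexpected key type: {key['type']!r}")
    return problems


if __name__ == "__main__":
    # Hypothetical sample; a real key file contains more fields and a real private key.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "my-project",
        "private_key": "-----BEGIN PRIVATE KEY-----\n...",
        "client_email": "exporter@my-project.iam.gserviceaccount.com",
    })
    print(check_key_file(sample))
```

In practice you would pass the contents of the downloaded file, for example `check_key_file(open(path).read())`, where `path` is wherever you saved the key.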

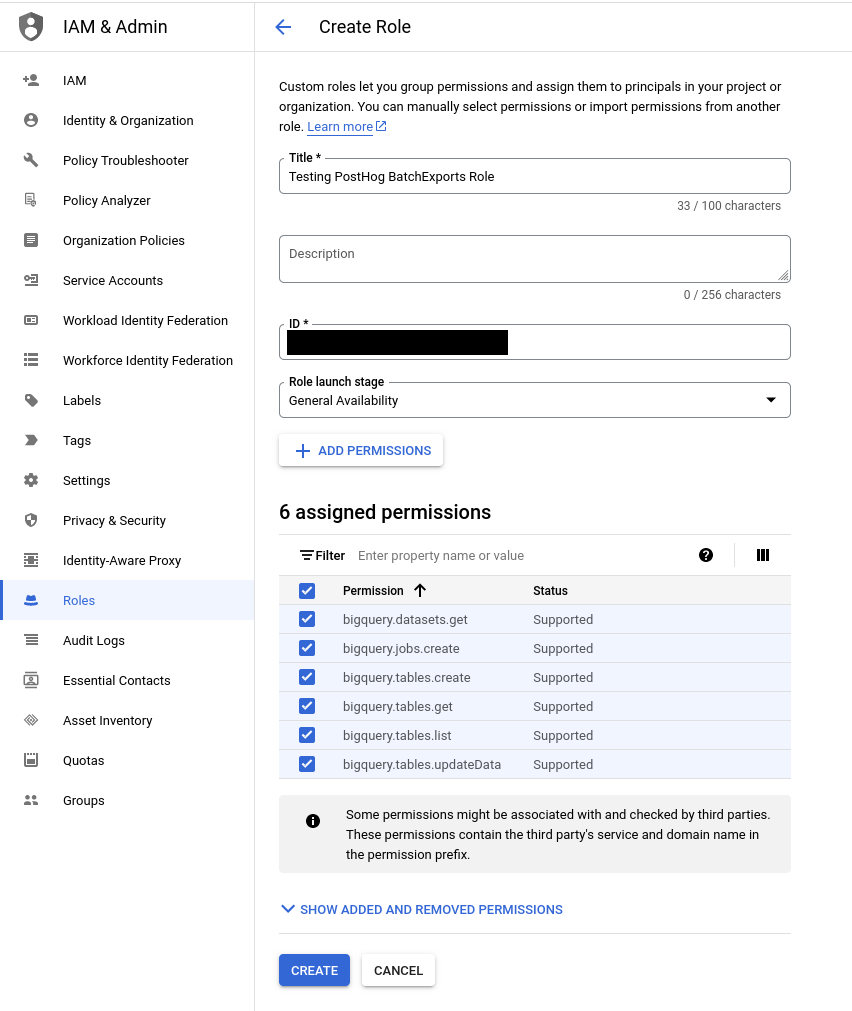

- Create a role which has only the specific permissions the batch export requires (listed below), or use the built in

BigQuery Data OwnerandBigQuery Job Userroles. If you create a custom role, you will need:bigquery.datasets.getbigquery.jobs.createbigquery.tables.createbigquery.tables.getbigquery.tables.listbigquery.tables.updateData
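If you prefer to create the custom role from code instead of the console, it corresponds to a request to the IAM API's `projects.roles.create` method. Below is a minimal Python sketch that only builds that request body from the permissions listed above; sending it (with an authenticated HTTP client, or by passing the same permissions to `gcloud iam roles create`) is not shown. The role ID, title, and description are hypothetical placeholders.

```python
import json

# Permissions a custom role for BigQuery batch exports needs (from the list above).
BATCH_EXPORT_PERMISSIONS = [
    "bigquery.datasets.get",
    "bigquery.jobs.create",
    "bigquery.tables.create",
    "bigquery.tables.get",
    "bigquery.tables.list",
    "bigquery.tables.updateData",
]


def custom_role_request(role_id: str, title: str) -> dict:
    """Build a request body for the IAM API's projects.roles.create method.

    role_id and title are chosen by you; the values in the demo are hypothetical.
    """
    return {
        "roleId": role_id,
        "role": {
            "title": title,
            "description": "Permissions required by PostHog BigQuery batch exports",
            "includedPermissions": sorted(BATCH_EXPORT_PERMISSIONS),
            "stage": "GA",
        },
    }


if __name__ == "__main__":
    body = custom_role_request("posthog_batch_exports", "PostHog batch exports")
    print(json.dumps(body, indent=2))
```

Keeping the permission list in one constant makes it easy to compare against what the console shows for an existing role.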

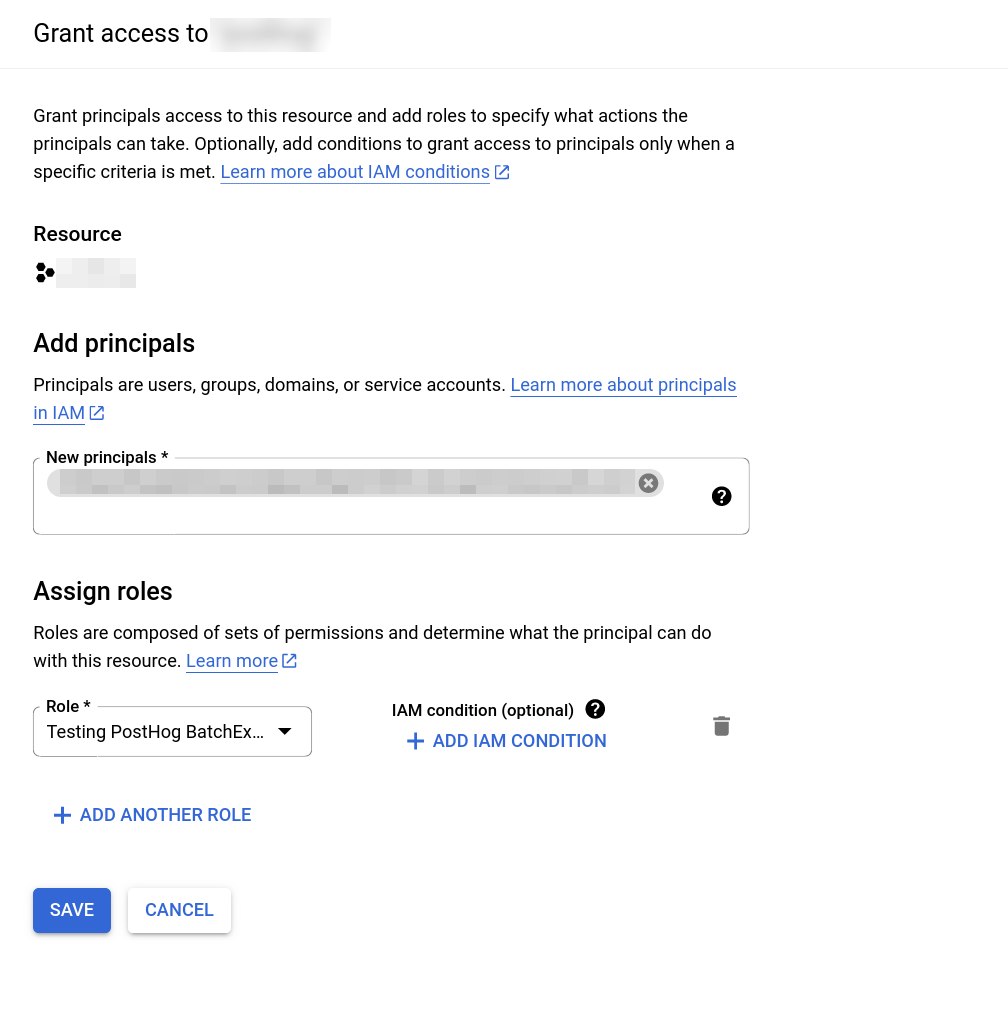

- Grant the Service Account access to run jobs in your Google Cloud project. This can be done by granting the

BigQuery Jobs Userrole or the role we created in the previous step on your project.

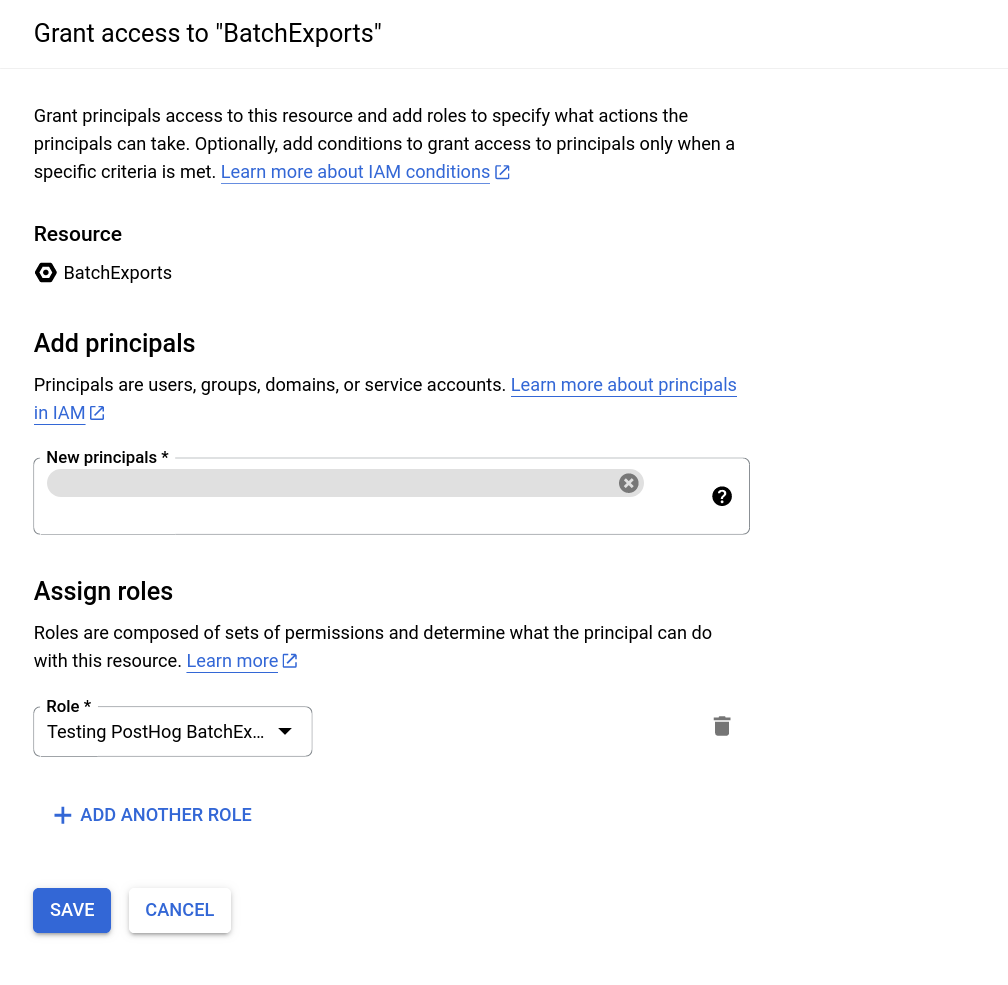

Navigate to IAM and click on Grant Access to arrive at this screen:

In the screenshot above, we have used a custom role named

Testing PostHog BatchExportswith the permissions listed in the previous step.
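Granting a role on the project adds a binding to the project's IAM policy. The sketch below shows that read-modify-write step on a policy you have already fetched as a dictionary (for example via the Resource Manager `getIamPolicy` method); writing it back with `setIamPolicy`, or doing the whole grant with `gcloud projects add-iam-policy-binding`, is not shown. `roles/bigquery.jobUser` is the ID of the predefined BigQuery Job User role; the service account email, project ID, and custom role ID in the demo are hypothetical.

```python
# ID of the predefined "BigQuery Job User" role.
JOB_USER_ROLE = "roles/bigquery.jobUser"


def service_account_member(email: str) -> str:
    """IAM member string for a service account."""
    return f"serviceAccount:{email}"


def custom_role_name(project_id: str, role_id: str) -> str:
    """Full resource name of a project-level custom role."""
    return f"projects/{project_id}/roles/{role_id}"


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM policy with `member` granted `role`.

    Other bindings and fields (such as etag) are preserved, the input is not
    mutated, and the member is not added twice. Conditional bindings are skipped.
    """
    bindings = [dict(b, members=list(b.get("members", []))) for b in policy.get("bindings", [])]
    for binding in bindings:
        if binding.get("role") == role and "condition" not in binding:
            if member not in binding["members"]:
                binding["members"].append(member)
            break
    else:
        # No unconditional binding for this role yet: create one.
        bindings.append({"role": role, "members": [member]})
    return {**policy, "bindings": bindings}


if __name__ == "__main__":
    policy = {"bindings": [{"role": "roles/viewer", "members": ["user:a@example.com"]}], "etag": "BwX"}
    member = service_account_member("exporter@my-project.iam.gserviceaccount.com")
    print(add_binding(policy, JOB_USER_ROLE, member))
    # To grant a custom role instead, pass custom_role_name("my-project", "posthog_batch_exports").
```

Leaving the policy's `etag` unchanged lets `setIamPolicy` reject the write if someone else modified the policy in the meantime.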

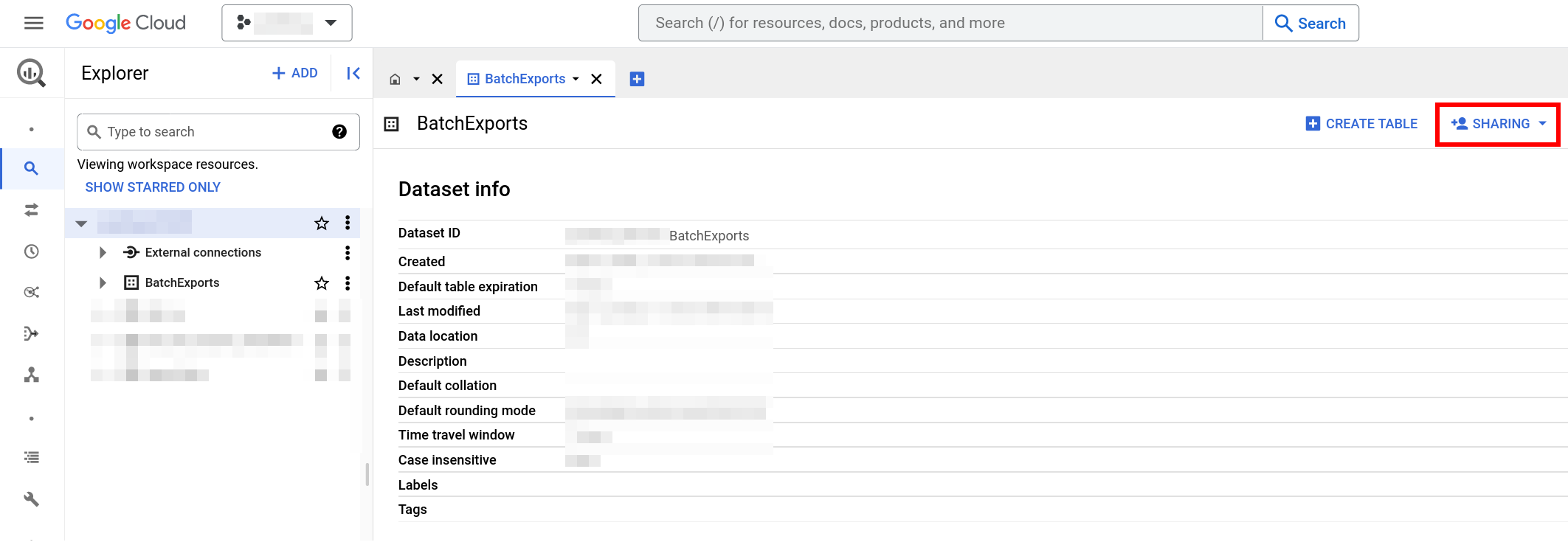

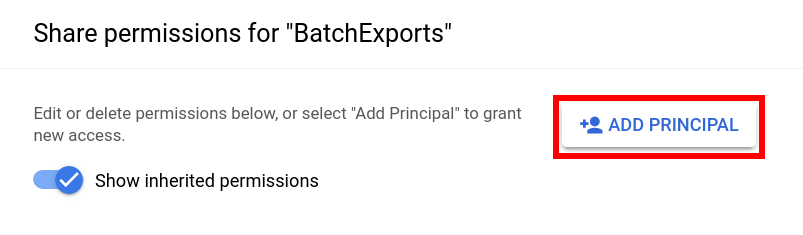

- Create a dataset within a BigQuery project (ours is called

BatchExports, but any name will do). - Use the Sharing and Add Principal buttons to grant access to your dataset with your Service Account created in step 1. Next, assign either the

BigQuery Data Ownerrole or your custom role created in step 4 to provide permissions for the dataset access. Read the full instructions on granting access to the dataset in BigQuery if unclear.

In the screenshot below, we grant our Service Account access to the

BatchExportsdata set and assign theTesting PostHog BatchExportsrole permissions for it.

- All done! After completing these steps, you can create a BigQuery batch export in PostHog, and your data will start flowing from PostHog to BigQuery.

Event schema

This is the schema of all the fields that are exported to BigQuery.

| Field | Type | Description |
|---|---|---|
| uuid | STRING | The unique ID of the event within PostHog |
| event | STRING | The name of the event that was sent |
| properties | STRING | A JSON object with all the properties sent along with an event |
| elements | STRING | A string of elements surrounding an autocaptured event |
| set | STRING | A JSON object with any person properties sent with the $set field |
| set_once | STRING | A JSON object with any person properties sent with the $set_once field |
| distinct_id | STRING | The distinct_id of the user who sent the event |
| team_id | STRING | The team_id for the event |
| ip | STRING | The IP address that was sent with the event |
| site_url | STRING | The $current_url property of the event. This field has been kept for backwards compatibility and will be deprecated |
| timestamp | TIMESTAMP | The timestamp associated with an event |
| bq_ingested_timestamp | TIMESTAMP | The timestamp when the event was sent to BigQuery |
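Because `properties`, `set`, and `set_once` are exported as JSON-encoded strings rather than nested records, they need to be parsed before you can work with individual keys outside BigQuery. Here is a minimal Python sketch, assuming rows have already been fetched as dictionaries keyed by column name (by whatever query code you use); treating empty or missing values as empty objects is a choice made for this sketch, and the sample row is made up. `elements` is left alone because it is a plain string, not JSON.

```python
import json
from typing import Any

# Columns that the schema above stores as JSON-encoded strings.
JSON_COLUMNS = ("properties", "set", "set_once")


def decode_row(row: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of an exported row with its JSON string columns parsed.

    Empty or missing JSON columns are returned as empty dicts.
    """
    decoded = dict(row)
    for column in JSON_COLUMNS:
        raw = row.get(column)
        decoded[column] = json.loads(raw) if raw else {}
    return decoded


if __name__ == "__main__":
    # Made-up row shaped like the schema above.
    row = {
        "uuid": "0190a1b2-0000-0000-0000-000000000000",
        "event": "$pageview",
        "properties": '{"$current_url": "https://example.com/pricing"}',
        "set": '{"plan": "free"}',
        "set_once": "",
        "distinct_id": "user-1",
    }
    print(decode_row(row)["properties"]["$current_url"])
```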

Creating the batch export

- Navigate to the exports page in your PostHog instance (Quick links if you use PostHog Cloud US or PostHog Cloud EU).

- Click "Create export workflow".

- Select BigQuery as the batch export destination.

- Fill in the necessary configuration details.

- Finalize the creation by clicking on "Create".

- Done! The batch export will schedule its first run at the start of the next period.
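As a rough illustration of "the start of the next period", the sketch below computes the next hourly or daily boundary after a given time. The alignment to UTC hour and day boundaries, the `"hour"`/`"day"` interval names, and the helper itself are assumptions for illustration only; PostHog's scheduler may use other intervals or offsets.

```python
from datetime import datetime, timedelta, timezone


def next_period_start(now: datetime, interval: str) -> datetime:
    """Return the first period boundary strictly after `now`.

    Hypothetical helper: assumes "hour" and "day" periods aligned to UTC.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    now = now.astimezone(timezone.utc)
    if interval == "hour":
        # Truncate to the current hour, then step forward one hour.
        start = now.replace(minute=0, second=0, microsecond=0)
        step = timedelta(hours=1)
    elif interval == "day":
        # Truncate to midnight UTC, then step forward one day.
        start = now.replace(hour=0, minute=0, second=0, microsecond=0)
        step = timedelta(days=1)
    else:
        raise ValueError(f"unsupported interval: {interval!r}")
    return start + step


if __name__ == "__main__":
    created = datetime(2024, 3, 5, 14, 23, tzinfo=timezone.utc)
    print(next_period_start(created, "hour"))  # 2024-03-05 15:00:00+00:00
    print(next_period_start(created, "day"))   # 2024-03-06 00:00:00+00:00
```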

BigQuery configuration

Configuring a batch export targeting BigQuery requires the following BigQuery-specific configuration values:

- Table ID: The ID of the destination BigQuery table. This is not the fully-qualified name of a table, so omit the dataset and project IDs. For example, for the fully-qualified table name `project-123:dataset:MyExportTable`, use only `MyExportTable` as the table ID.

- Dataset ID: The ID of the BigQuery dataset which contains the destination table. Only the dataset ID is required, so omit the project ID if present. For example, for the dataset `project-123:my-dataset`, use only `my-dataset` as the dataset ID.

- Google Cloud JSON key file: The JSON key file your BigQuery Service Account uses to access BigQuery. It is generated when you create a key for the Service Account; see the Google Cloud documentation on service account keys for more information.
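If you have a fully-qualified name at hand, the IDs to enter can be extracted mechanically. A minimal Python sketch, assuming names separated by `:` or `.` as in the examples above; the helper names are hypothetical, and legacy domain-scoped project IDs (such as `example.com:my-project`) are deliberately not handled.

```python
import re

# Split on either separator. Domain-scoped project IDs such as
# "example.com:my-project" are NOT handled by this sketch.
_SEPARATORS = re.compile(r"[:.]")


def _split(name: str, expected: int) -> list[str]:
    parts = _SEPARATORS.split(name)
    if len(parts) != expected or not all(parts):
        raise ValueError(f"expected {expected} non-empty parts in {name!r}")
    return parts


def table_id_from(fully_qualified_table: str) -> str:
    """Return only the table ID from "project:dataset:table" (or dot-separated)."""
    return _split(fully_qualified_table, 3)[2]


def dataset_id_from(name: str) -> str:
    """Return only the dataset ID from "project:dataset", or a bare dataset ID."""
    parts = _SEPARATORS.split(name)
    if len(parts) == 1 and parts[0]:
        return parts[0]
    return _split(name, 2)[1]


if __name__ == "__main__":
    print(table_id_from("project-123:dataset:MyExportTable"))  # MyExportTable
    print(dataset_id_from("project-123:my-dataset"))           # my-dataset
```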